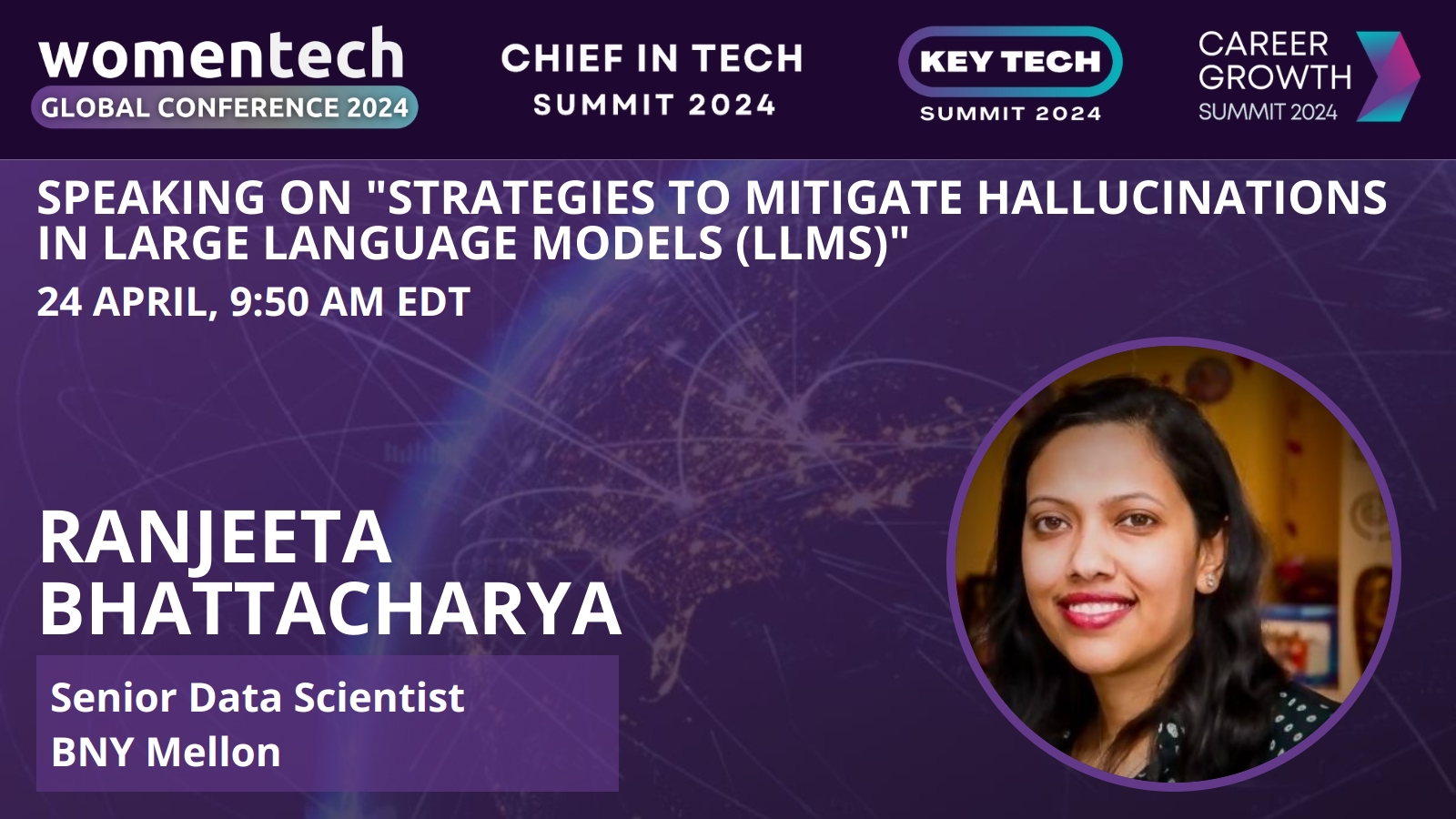

Session: Strategies to Mitigate Hallucinations in Large Language Models (LLMs)

Rapid advances in generative AI have opened up many new applications. This talk focuses on a crucial aspect of building and applying generative AI in enterprise settings: mitigating hallucinations in large language models (LLMs), a specific type of generative AI model. Factually inaccurate output can originate both during the initial development of an LLM and during the later refinement of an existing model's responses through prompt engineering. For the development phase, I will cover new decoding strategies, optimizations based on knowledge graphs, additional loss-function components, and supervised fine-tuning. For the prompt engineering phase, I will cover retrieval augmentation, feedback-based strategies, and prompt tuning.

Key Takeaways

- Understand generative AI and its latest trends.
- Evaluate the latest generative AI models.
- Understand how generative AI models can hallucinate.
- Understand how to mitigate hallucinations.
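
To make one of the prompt-phase techniques named in the abstract more concrete, here is a minimal sketch of retrieval augmentation. It is an illustration only, not the speaker's method: the sample documents are made up, the function names (`tokenize`, `retrieve`, `build_grounded_prompt`) are hypothetical, and simple word overlap stands in for the embedding-based search a real system would more likely use.

```python
import re

# Made-up sample knowledge base (an assumption for illustration only).
DOCUMENTS = [
    "The custody fee schedule was last updated in March 2023.",
    "Settlement for equity trades follows a T+1 cycle.",
    "Client onboarding requires two forms of identification.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question.

    Documents with no overlapping words are dropped. Word overlap is a
    stand-in for a real similarity search.
    """
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked if score > 0][:top_k]


def build_grounded_prompt(question, documents, top_k=2):
    """Build a prompt that asks the model to answer only from retrieved text."""
    passages = retrieve(question, documents, top_k)
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant passage found)"
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_grounded_prompt(
        "What is the settlement cycle for equity trades?", DOCUMENTS
    ))
```

The resulting prompt would then be sent to whichever LLM is in use. The idea is that supplying retrieved passages, together with an explicit instruction to admit when the answer is missing, gives the model less room to invent facts.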

Bio

I'm a senior data scientist within the AI Hub wing of the world's largest custodian bank. As a machine learning practitioner, I do intensely data-driven work: thinking through complex use cases and supporting end-to-end AI/ML solutions from inception through deployment. I have more than 15 years of experience as an analytics and technology consultant, in multi-faceted techno-functional roles including software developer, solution designer, technical analyst, delivery manager, and project manager, for Fortune 500 IT consulting companies across the globe.

I hold an undergraduate degree in computer science and engineering, a master's degree in data science, and multiple certifications and publications in these domains, demonstrating my commitment to continuous learning and knowledge sharing.