Designing UX for future products

Designing User Experience (UX) for Future Products: Insights from Liz Swen Beg

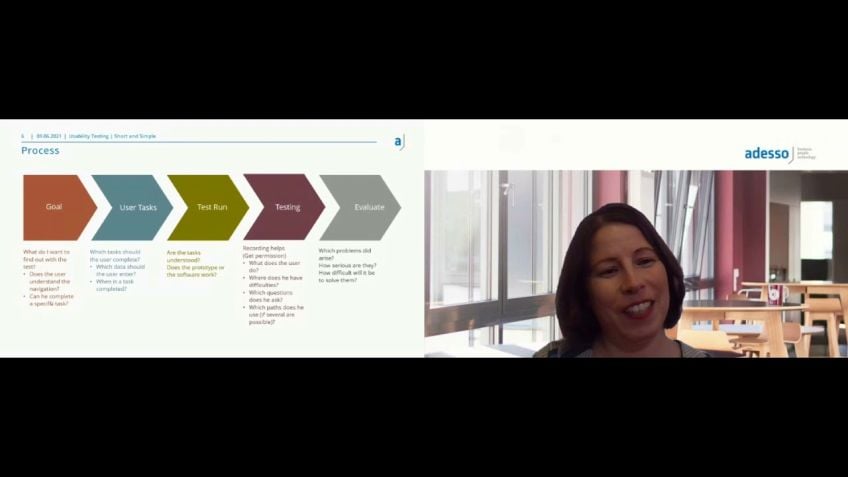

Liz Swen Beg, head of design at D BT and a PhD candidate in Information Design, examines what it means to design UX for future products. In her talk, she shares examples of emerging digital technologies and discusses the ethics that underpin UX design for the future.

About Liz Swen Beg

Liz is based in Pretoria, South Africa, where she combines her job as head of design at D BT, a custom software consultancy, with a PhD in Information Design focused on health humanities. A self-professed lover of adventure, she has traveled to 22 countries. Outside of work, she plays the violin in what she calls an 1800s cover band, which is essentially a symphony orchestra.

Understanding The Current Designing Scenario

As Liz emphasizes, designers usually create products in ideal conditions: seated at a desk in a quiet, well-lit room. Users experience those products in the real world, where lighting varies, surroundings are noisy, and people are often walking or otherwise distracted. These non-ideal, situational conditions are where UX design has the most scope to make a difference in people's lives.

Interactions of The Future

Future-oriented product design tends to evolve beyond screens to the realm of experiences. Designers need to mold their creativity to develop technologies that can enhance experiences and interactions in everyday life. Let’s examine a few of these technologies through the lens of Liz Swen Beg.

Augmented Reality (AR)

AR technology overlays information onto real-world scenes. For instance, the Johns Hopkins neurosurgery department performed its first AR surgery, a spinal fusion, in which headsets helped surgeons visualize the operating area in real time.

Virtual Reality (VR)

Some may argue that VR is just escapism, but its medical applications, especially for children undergoing treatments, prove this to be untrue. The technology allows children to play games, insulating them from the fear usually associated with clinical procedures.

Voice Interfaces

Voice interfaces can act on a user's behalf. Google Assistant, for instance, can make a phone call for you, such as booking an appointment, based on parameters you set.

The World of 'Smart' Everything

“Smart” products are appearing almost everywhere. Liz highlights two concepts: a Bosch wardrobe sensor that detects when you are running low on clean clothes and can send laundry out or order replacements, and IKEA's smart table, which measures ingredients, suggests recipes, and more.

Gesture Interfaces

Controlling things with a wave of the hand might sound like fantasy, but gesture interfaces such as Google's Soli project, which uses radar to detect finger gestures, are making it a reality. For instance, gesture control in BMW cars lets drivers use simple hand motions to operate functions such as volume without looking at a screen.
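To make the idea of mapping a hand motion to a control concrete, the following is a minimal sketch of one way a circular gesture could be turned into a volume change. It is not BMW's or Google's implementation. It assumes the sensor already delivers the fingertip path as a list of (x, y) points with the y axis pointing up, and it uses the shoelace formula to tell clockwise from counterclockwise motion. The function names, the step size, and the 0 to 100 volume range are all hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def signed_area(path: List[Point]) -> float:
    """Shoelace formula: positive for counterclockwise paths (y axis pointing up)."""
    area = 0.0
    # Pair each point with the next one, wrapping around to close the loop.
    for (x1, y1), (x2, y2) in zip(path, path[1:] + path[:1]):
        area += x1 * y2 - x2 * y1
    return area / 2.0


def volume_after_gesture(volume: int, path: List[Point], step: int = 5) -> int:
    """Hypothetical mapping: a clockwise circle raises the volume,
    a counterclockwise circle lowers it."""
    area = signed_area(path)
    if abs(area) < 1e-9:  # no clear rotation, so leave the volume unchanged
        return volume
    delta = step if area < 0 else -step
    # Clamp to the assumed 0..100 volume range.
    return max(0, min(100, volume + delta))


# A made-up fingertip path: top, right, bottom, left (clockwise when y points up).
clockwise = [(0.0, 1.0), (1.0, 0.0), (0.0, -1.0), (-1.0, 0.0)]
print(volume_after_gesture(50, clockwise))        # volume goes up by one step
print(volume_after_gesture(50, clockwise[::-1]))  # volume goes down by one step
```

A real system would first have to recognize that the motion is a deliberate circle at all, which is the hard part this sketch leaves out.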

Internet of Things (IoT)

The IoT connects products to one another so that they can cooperate without manual intervention. Examples include a soil-moisture sensor that checks the weather forecast and decides when to water the garden, and Amazon's Ring security drone, which works with Amazon Alexa to investigate noises in the house while you are away.
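The watering example boils down to a small decision rule that combines a sensor reading with a forecast. The sketch below illustrates that kind of rule only. It is not how any particular product works, and the field names, units, and thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    soil_moisture: float     # assumed unit: fraction of saturation, 0.0 to 1.0
    rain_probability: float  # assumed: forecast chance of rain in the next 24 hours


def should_water(reading: Reading,
                 dry_threshold: float = 0.30,
                 rain_cutoff: float = 0.60) -> bool:
    """Water only when the soil is dry and rain is unlikely.

    Both thresholds are hypothetical defaults, not values from a real device.
    """
    if reading.soil_moisture >= dry_threshold:
        return False  # soil is still moist enough
    return reading.rain_probability < rain_cutoff  # skip if rain will likely do the job


print(should_water(Reading(soil_moisture=0.20, rain_probability=0.10)))  # dry, no rain expected
print(should_water(Reading(soil_moisture=0.20, rain_probability=0.80)))  # dry, but rain is likely
```

From a UX perspective, the point is that the user never has to make this decision; the design work shifts to choosing sensible defaults and letting people override them.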

Brain Interfaces

This is a novel field in which brain activity controls interfaces. Elon Musk's Neuralink is exploring this concept: a sensor implanted in the brain connects to a phone via Bluetooth. In a recent demonstration, a macaque monkey used the implant to control a game of Pong with his thoughts alone.

The Importance of Ethical Design

Liz emphasizes that product development must consider ethical design, which involves diversity, inclusion, sustainability, and safeguards against misuse of the products.

Concluding Thoughts

In Liz's view, the future has indeed arrived and it's time for designers to step up and incorporate these technologies into the products and solutions they create to enhance the user's interaction and experience. As technology advances, it is clear that UX design is not just about designing the user-interface, but also considering the larger ethical implications it has on society.

Video Transcription

Thank you so much for having me. I'm Liz Swen Beg, I'm from South Africa, and today I'm talking about designing UX for future products. So just a little bit about me: I'm the head of design at D BT. We're a custom software consultancy. I am currently busy with my PhD in information design, looking at health humanities. I'm hoping to have it done within the next few months. I'm based in Pretoria in South Africa. I play violin in an 1800s cover band, which is essentially just a symphony orchestra, and I love adventure. I've traveled to 22 countries so far and am really hoping that the world gets back to normal sometime soon so that I can go and visit quite a few more interesting places. So if we want to talk about designing UX for future products, I want to first look at designing UX and where we find ourselves in this space at the moment. As designers, or as people creating products, when we're designing things or coming up with ideas, we are very often comfortably seated at our desks in a nicely, brightly lit room. It's relatively quiet, and there are often no disturbances.

Now, these last two might have changed a little bit during COVID times with family at home. But we tend to design in ideal situations, and we get the time to focus on this thing that we have created. Now, when people are actually using our product in the real world, the lighting varies. It can be very bright or very dark. It's often noisy. People can be using the product while they're walking or dancing, and they can use it in very unexpected ways.

So in real life, there are very few of the ideal conditions that we get to experience while creating solutions. When it comes to the future of UX design, I found this quote while I was researching this talk, which I found very poignant: the future of UX design is not on the screen. So if we look at those situations that are not ideal conditions, they're situational, and more and more we're starting to see UX design and experiences being translated into the real world, and products being created that will affect people in the real world. Now I'm going to start looking at these future products that we talk about. When you think about future products (these are some examples I found on the internet), we don't think about screens, we think about experiences. You might recognize the bottom right-hand one, the knife toasting the bread as it slices, from The Hitchhiker's Guide to the Galaxy, one of my favorite movies. These are all experiences that start to be considered. So when we think of designing for future products, we need to start thinking wider than screens, and situationally instead. We start to look at how these different technologies can help to enhance our experiences and our interactions.

So that's what I'll be looking at today, giving some examples that are currently being used in the world, or examples of where people want to go. The first one is augmented reality. I'm sure we are all familiar with this. You look at the real world and you overlay something on top of it. Now, this is an example from the Johns Hopkins neurosurgery department in America. They performed their first augmented reality surgery at the end of last year, which was a spinal fusion where the doctors actually wore these helmets that could help them to see, in real time, the operation that they were performing, without the interference of blood or whatever else might be in the way.

And this assisted them greatly to see the angles and to see exactly where things needed to go. Another one we're all familiar with is virtual reality, so full immersion into an experience. This is also being very successfully used in medicine, and particularly for children, actually. When children are getting injections or vaccines or treatments for various things, they can play a game, and the nurses time it so that the game the kids are playing relates to the treatment that they are getting. For instance, if they need to get an injection, the game might be someone stabbing them with a sword or something like that, so that it's playful and in context and it's no longer scary and intimidating for them. Now, another one I'm sure we're all familiar with is voice interfaces. There are a variety of these in the world at the moment; most of the big software companies have their own version. And the exciting place where this starts to get used is that you can use these interfaces to actually interact with other people on your behalf. If you're like me and you really hate making phone calls, Google Assistant has this feature. This was demoed three years ago, in 2018, at a conference already, where you can ask your Google Assistant to make an appointment on your behalf, and you give it the parameters and it continues to do that.

It's really interesting, and it's being continuously developed by the Google team to use this in more and different ways. Now, something that starts to get a little bit more futuristic, that we might be a little bit less familiar with, is basically smart everything. We're making everything smart nowadays. And this is an interesting example by Bosch, where they created this concept that you might be able to have a sensor in your cupboard that then detects: are you running low on shirts? Are you running low on laundry?

Then you can tell it, OK, send my clothes to the laundry or buy a new one. This might be a little bit of a first-world problem, but it's an interesting application. Now, another application that's really fascinating, though still in concept phase, unfortunately, is the smart table by IKEA. So I'll show you the quick video so that you can see all the different possibilities that they've come up with for this.

The table brings inanimate objects to life using smart light. A camera observes what happens on the table and relevant graphics are projected back onto the surface. This kitchen table is about more than just dining. It is your preparation surface, hob, workbench and children's play area.

Explore creative flavor combinations. Get suggestions on what to cook based on things you already have at home. Be inspired by any tempting recipe. Set the timer to decide how much time and effort you want to spend. Place ingredients on the cutting board for advice on how best to use them. Use the built-in scales to get the proportions right for doughs and batters.

OK. So I love cooking, and I for one would absolutely love a table that could do this for me: measure things, give suggestions, et cetera.

Now, the next example we're going to look at is gesture interfaces. This might seem very futuristic, but it's actually currently being used in quite a few applications. The first example I'll show you is the Google Soli project. It uses radar to actually detect your finger gestures, and this is a video from a few years ago. The current application is in the new Pixel phones, where the phone can detect gestures and actually answer phone calls or change music depending on that.

We can sense these interactions and we can put them to work. We can explore how well they work and how well they might work in products.

Blows your mind. Usually.

I completely agree. It blows my mind. I think it's such an innovative way of interacting with devices. The other example where this is currently widely used is in cars. BMW is quite a front runner in this, and they have gesture control in their cars, which is very useful when you don't want to look at a screen to change your music or to look at navigation.

BMW vehicles equipped with gesture control enable some functions to be operated simply by moving your hands. A camera in the roof lining detects gestures made under the rear-view mirror in front of the control display. When you, for example, move your hand in a circle with only your index finger pointed forward, you can change the volume. Turn clockwise to increase the volume, anticlockwise to reduce the volume. [inaudible] fingers and extend them forward. This gesture can be individually assigned to a function.

You can also go to the next song or to a previous song. This integrates with computer vision, which is related to machine learning and artificial intelligence, and which is becoming more and more prominent in these features as it allows for personalization and for interaction with the real world.

Now, I'm sure you've all heard of the Internet of Things. So next, we'll be looking at connected everything: how everything can relate to each other and how our products can actually speak to one another. An interesting example is this one by HydroPoint, where it can actually detect the moisture in the soil through a sensor. Then it connects to your phone and it detects the weather patterns, and based on that, it decides by itself when it needs to water your garden. For me, who's constantly over- or under-watering my plants,

this would be an absolute lifesaver. Another example is the Amazon Ring security drone. This is connected to your Amazon Alexa. When you leave the house, it activates, and if it detects a noise somewhere in the house, it pops up and flies to the spot, and you can see via your phone. It alerts you that something might have happened that you should be paying attention to. Again, a way that different products are interacting with each other.

Something that you don't have to manually do, and it's just making our lives a lot simpler and easier. Now, the one that really excites me and that really seems very futuristic is actually brain interfaces, where you can use your mind to control an interface. A company that's quite a big forerunner in this space is Neuralink. I'm sure it won't come as any surprise that this is Elon Musk's baby, as he has so many interesting spaces that he's working in, and they're really making big strides towards this technology. The way that it would work is you implant a Neuralink sensor in one or both brain hemispheres and it connects via Bluetooth to your phone. These are some videos on their website of how they foresee this working. So these are concept videos still. You would think about moving the cursor and you would eventually be able to get this right. I'll show you just now how they've been able to actually implement this, but you'd be able to play games with it. You'd be able to think about how you interact with this. Now, very recently, last month, they released a video where they had actually implanted two Neuralink devices into a nine-year-old macaque monkey's brain and had him performing tasks with it, and they show how they trained him. So I'll show you that.

So you can see the real example of how this is being applied.

Pager uses the joystick to move a cursor to targets presented on the screen. As he's playing this game, we are wirelessly streaming, in real time, the firing rates from thousands of neurons to a computer. Using these data, we calibrate the decoder by mathematically modeling the relationship between patterns of neural activity and the different joystick movements they produce.

After only a few minutes of calibration, we can use the output from the decoder to move the cursor instead of the joystick. Pager still moves the joystick out of habit, but as you can see, it's unplugged. He's controlling the cursor entirely with decoded neural activity. Our goal is to enable a person with paralysis to use a computer or phone with their brain activity alone.

So then they took it a step further and they trained the monkey to actually play Pong using only his mind.

One of the things the Neuralink allows Pager to do is to play his favorite video game, Pong. To control his paddle on the right side of the screen, Pager simply thinks about moving his hand up or down. We've removed the joystick altogether. Now that he's up to speed, let's increase the difficulty and see how well Pager can play with the Neuralink. As you can see, Pager is amazingly good at MindPong. He's focused and he's playing entirely of his own volition.

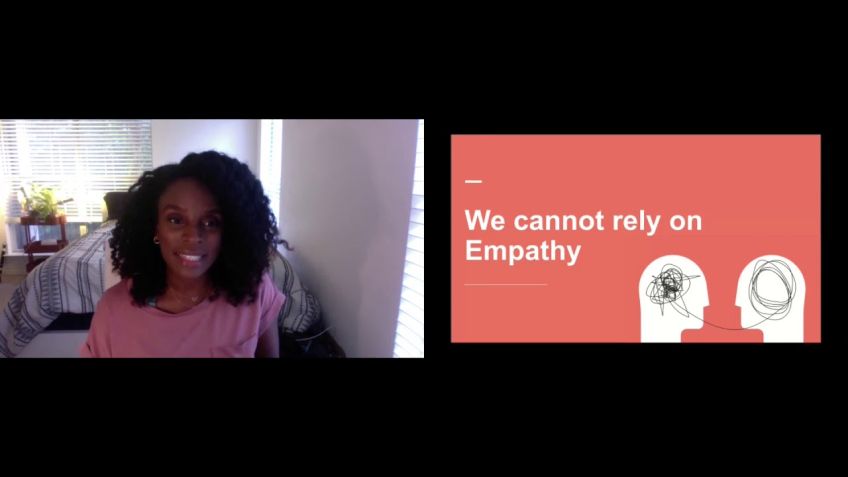

I definitely think Pager is a lot better at Pong than I've ever been able to be. So the application of this is absolutely incredible, and I think there's a lot of potential to see where it can go. Now, looking at implanting things in people's brains, it's important to think about the ethics that this actually contains. So, just a brief discussion about ethical design when it starts to come to very futuristic products. Sorry, just notifications on my screen. It's important to consider diversity and inclusion when we are designing. It is Pride Month at the moment, so happy Pride, everyone. We need to think about how we're going to include people that are different in our designs, in our teams, and in who we're creating the products for, whether this is a difference in terms of sexuality, gender, disability, neurodiversity, whatever it might be. We need to think about the diverse teams that we are including and that we are creating solutions for. We also need to look at sustainable design. As designers, we are very tempted to fall into the mindset that human-centered design is everything, where in reality we aren't the only ones on this planet, and the world doesn't revolve around us.

We need to think about ecosystems, we need to think about life systems, we need to think about how we interact with other people. It doesn't help if we create a solution that has a beautiful human-centered design for the interface, but the product itself is not sustainable. So we need to be careful about what we do, because we as designers actually have a lot of power and a lot of responsibility for the things that we create. Then lastly, I'd like to ask everyone to do a Black Mirror test with the products that you're creating. For those of you who are unfamiliar, Black Mirror is a series on Netflix, and each episode usually details a type of dystopian future where technology in some way has messed up people's lives, quite frankly. Very good series, would recommend. The idea is that when we bring this into our world, you need to try and think of the worst way that someone could potentially use your product or service, and how you can try to combat that. For instance, if you have a joint banking account with someone, very often a bank will only allow one person to have access to that. With me and my boyfriend, for instance, I have the only access to the app for the account, which means that financial abuse can very easily occur in such a situation. We need to design against that and think about how we can help people avoid these types of situations.

Then lastly, I would like to show you a video by Satya Nadella. He's the CEO of Microsoft, and this was his keynote talk at the Microsoft Build conference that happened two weeks ago. It's just a really beautiful representation of everything that we've spoken about: how interconnected technology is becoming and what we can actually strive towards, as the example that he's talking about in this actually already exists.

And George will share one of my favorite examples of how Anheuser-Busch InBev has used our meta stack to track every bottle from the wheat field through the manufacturing and distribution processes. They've created this complete digital twin of their breweries and supply chain.

As you can see here, the digital twin is synchronized with their physical brewery. It understands these complex relationships between equipment and natural ingredients. It enables the brewmasters to make adjustments based on dynamic conditions. It maintains uptime on many machines required during the packaging process.

It tracks the entire supply chain to reduce emissions. Deep reinforcement learning works with digital twins to help packing line operators detect and automatically compensate for bottlenecks. They even use mixed reality with their digital twin for remote assistance.

So in conclusion, this might sound very cheesy, but the future has arrived. The future is here, and we need to start thinking about how we can incorporate these technologies into the products and solutions that we create. Thank you very much. Please find me on LinkedIn or on Twitter. I post very silly things, and I like to talk about UX design. I'm not sure if we're actually going to have time for questions, but I will be around to answer questions if you would like to ask more. You are very welcome to. As I said, find me on LinkedIn or Twitter. I'm always very happy to chat. You can also find me on this platform. Send me a message. Yes, happy to chat. I see someone has asked whether you can get the slides. I will be publishing this as a Medium article, so if you follow me on LinkedIn or Twitter, for the third time, then you'll definitely be able to get it. I completely agree that gestures aren't necessarily accessible to everyone, and that's why we need to think about how we can actually allow more people to have access to this. We need to think about whether the solutions we create are accessible for different kinds of people.

A disability isn't necessarily someone who doesn't have a hand or an arm, or who can't use their hand or arm. It could be a temporary disability, such as a mother carrying her child: she can't use her arms. So you need to think about how you can make more things accessible. I'm going to keep talking until they kick me off the platform, basically. So, any questions? Rebecca had a question about gesture interfaces: the gesture for turning the volume up and down is familiar to people who grew up with knobs, but I could imagine it's not intuitive, or becomes unintuitive, for younger persons who are not familiar with the physical counterpart. How can we address that to make sure that every user can interact with the product? It's a very good point. If you think of early interfaces, when Apple's iPhones started coming out, the way that interface was designed was very skeuomorphic. In other words, it very much replicated things in the real world. The notepad that you could type things in looked like a yellow lined notebook. The place where you could read your ebooks looked like a bookshelf.

This was a way of bringing in the real world so that people could identify things that they're familiar with in this new interface. Now, you'll have noticed that as we become more digitally literate and more advanced in our design, we are moving away from physical representations, because people are becoming familiar with the interactions themselves. I think that's why they originally looked at physical interactions, like turning a knob to increase or decrease volume. And it's a good question: are people going to learn that behavior? Are they going to be familiar with this, or do we need to start introducing other gestures? It's also important to remember that, from a cultural point of view, gestures mean different things in different countries. I'm sure you've all experienced something like that. We need to become used to that, and we need to consider that we can't just implement things like gestures universally, because they mean very different things in different countries.

Let's see. So yeah, I think it really will depend on what people get used to and what gestures people become comfortable with. I think gestures might also start to become standardized across different platforms, in the same way that we have standards for app design and for web design. But it will depend. It could also be that each platform has its own: for instance, BMW cars might have a specific standard that they all adhere to, but other cars might have completely different standards because they're from a different company. So it will be interesting to see whether, in the same way that you have people that are used to Android interactions and people that are used to iOS interactions, you start to have people that buy gesture products, or whatever else it might be, based on the gestures or the interactions that they are familiar with.

Yes, Sara is saying that she's shocked that the joint account only had access for one person. I remember being in a talk where someone mentioned that she and her boyfriend also had a joint account, and they needed to answer security questions every time they logged in. Now, her boyfriend was the main account holder, so all the security questions were his. There was literally no way for her to access the account without asking for his input. Or take things like Amazon Alexa. If you think about it, it has the option to drop in to the speaker via your phone without alerting anyone that this is happening. So it's actually possible to spy on people within your house without them realizing that someone is listening to them. In most cases, people would just use it as an intercom to quickly talk to someone on the other side of the house, but it could very easily be used for nefarious purposes. We need to think about these things and think about how we can counteract that. Any other questions, guys? OK. Thank you so much for having me. I really had fun and I hope you enjoy the rest of the conference. Please do find me.

I love chatting. Enjoy the rest of your week. Bye.